SDM assessment and prediction

RStudio project

Open the RStudio project that we created in the first session. I recommend to use this RStudio project for the entire course and within the RStudio project create separate R scripts for each session.

- Create a new empty R script by going to the tab “File”, select “New File” and then “R script”

- In the new R script, type

# Session 5: SDM assessment and predictionand save the file in your folder “scripts” within your project folder, e.g. as “5_SDM_eval.R”

1 Introduction

We have already fitted simple species distribution models (SDMs) in the previous tutorial. Remember the five general model building steps: (i) conceptualisation, (ii) data preparation, (iii) model fitting, (iv) model assessment, and (v) prediction (Zurell et al. 2020). In this practical, we will concentrate on steps (iv)-(v). We will learn how to validate SDMs, visualise the fitted species-environment relationships, and make predictions. For getting a deeper understanding of these single steps, I highly recommend studying more advanced reviews (Guisan and Zimmermann 2000; Guisan and Thuiller 2005; Elith and Leathwick 2009) and textbooks on SDMs (Franklin 2010; Guisan, Thuiller, and Zimmermann 2017).

1.1 Recap of last session: data and models

We will continue to work on the lynx example of the previous session, based on the IUCN range maps. We can quickly read the data back in:

library(terra)

load('data/4_SDM_intro.RData')Quickly set up the model according to session 4.

m_step <- step(

glm( occ ~ bio11 + I(bio11^2) + bio10 + I(bio10^2),

family='binomial', data=lynx_thinned)

)## Start: AIC=266.1

## occ ~ bio11 + I(bio11^2) + bio10 + I(bio10^2)

##

## Df Deviance AIC

## - I(bio11^2) 1 256.19 264.19

## <none> 256.10 266.10

## - bio10 1 271.31 279.31

## - I(bio10^2) 1 273.32 281.32

## - bio11 1 295.88 303.88

##

## Step: AIC=264.19

## occ ~ bio11 + bio10 + I(bio10^2)

##

## Df Deviance AIC

## <none> 256.19 264.19

## - bio10 1 272.15 278.15

## - I(bio10^2) 1 274.09 280.09

## - bio11 1 352.75 358.75summary(m_step)##

## Call:

## glm(formula = occ ~ bio11 + bio10 + I(bio10^2), family = "binomial",

## data = lynx_thinned)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -12.02724 3.05541 -3.936 8.27e-05 ***

## bio11 -0.34456 0.04350 -7.921 2.35e-15 ***

## bio10 1.47632 0.39156 3.770 0.000163 ***

## I(bio10^2) -0.04900 0.01263 -3.878 0.000105 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 444.40 on 322 degrees of freedom

## Residual deviance: 256.19 on 319 degrees of freedom

## AIC: 264.19

##

## Number of Fisher Scoring iterations: 62 Model assessment

Before we can use our model for making predictions in space and time, we need to assess model behaviour and predictive performance.

2.1 Visualising response curves

When only looking at parameter estimates, it is sometimes difficult to envision how exactly the fitted response (the “niche” function) looks like. Also, for more complicated machine learning algorithms (that we will get to know later), there are no parameter estimates to look at, so we need different means to judge the plausibility of the fitted response.

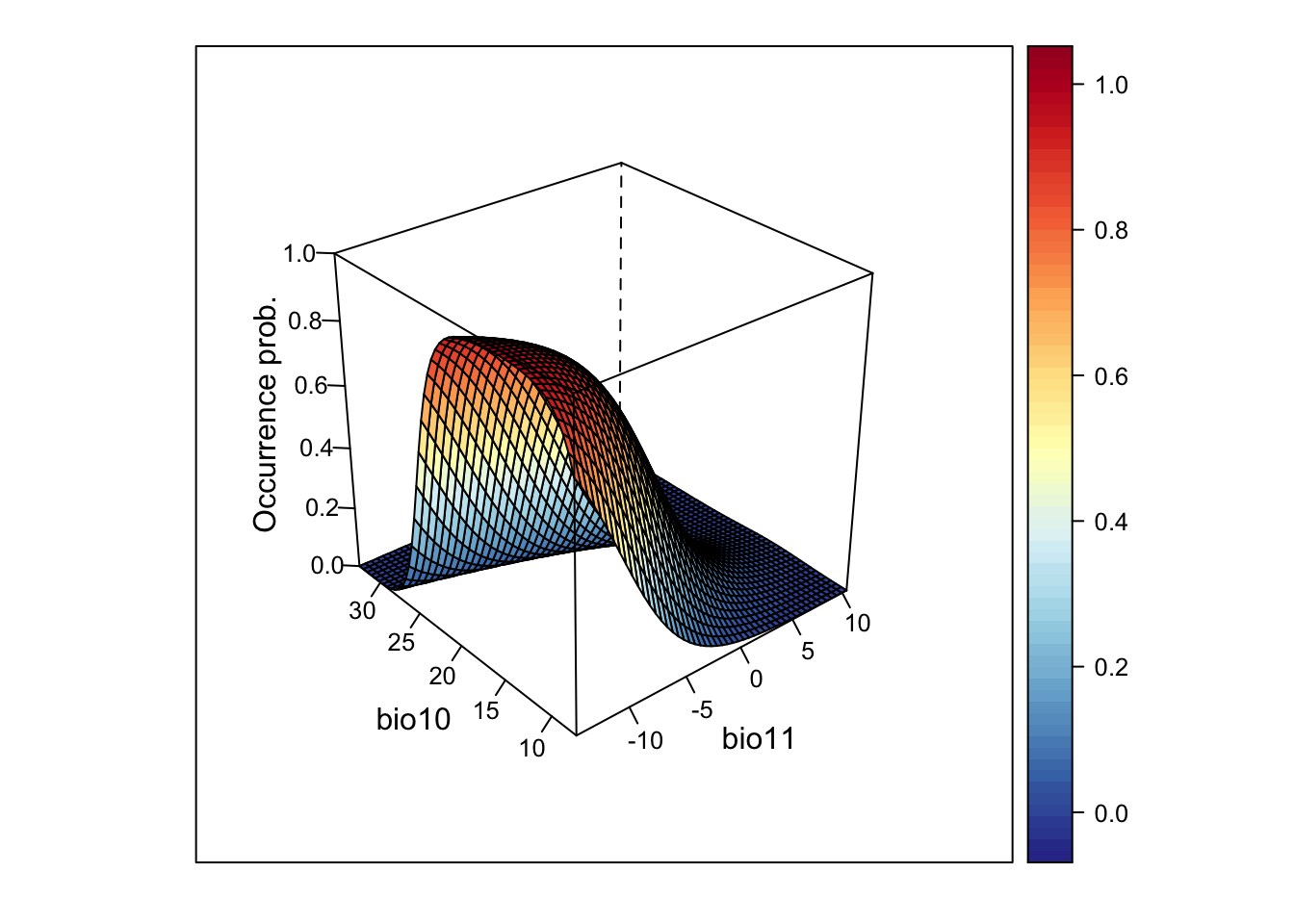

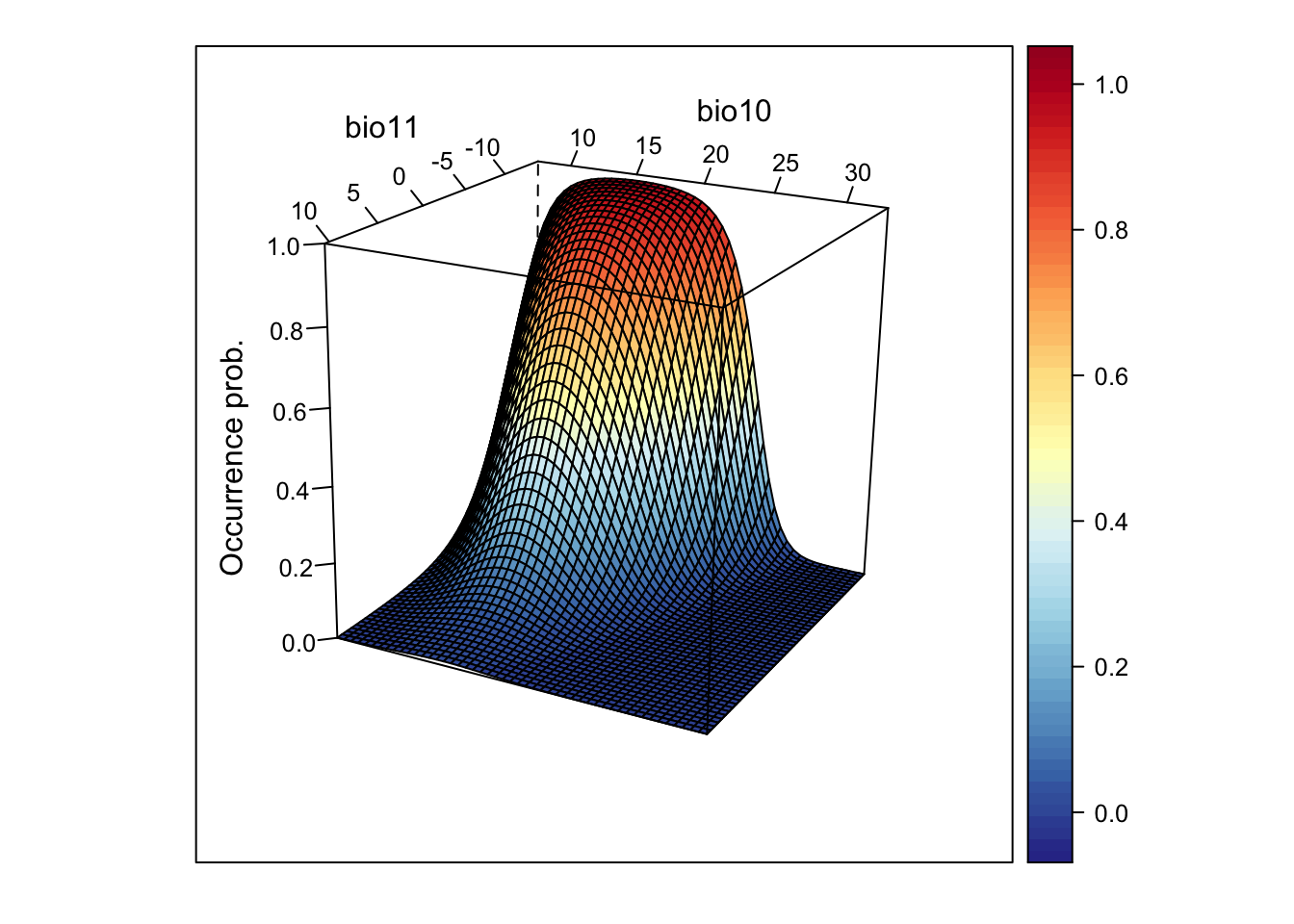

Response curves and response surfaces visualize the (mean) values that a model would predict for an environmental situation, meaning for specific values of the predictor variables. In the current example, we use only two predictors, so we can simply construct a 3D surface that shows the predicted values along the two environmental gradients.

We can get the predicted values using the predict()

function.

# If we do not provide "newdata", then predict() should simply return the fitted values:

head(predict(m_step, type='response'))## 1 2 3 4 5 6

## 0.014108854 0.033726463 0.057589550 0.001026094 0.005222577 0.016571959head(m_step$fitted)## 1 2 3 4 5 6

## 0.014108854 0.033726463 0.057589550 0.001026094 0.005222577 0.016571959If we want to predict model response along the two environmental

gradients, we first need to define a grid that contains all combinations

of the two variables. For this, we use a combination of the

expand.grid() and seq() functions.

# Wwe want to make predictions for all combinations of the two predictor variables

# and along their entire environmental gradients:

xyz <- expand.grid(

# We produce a sequence of environmental values within the predictor ranges:

bio11 = seq(min(lynx_thinned$bio11),max(lynx_thinned$bio11),length=50),

bio10 = seq(min(lynx_thinned$bio10),max(lynx_thinned$bio10),length=50)

)

# Now we can make predictions to this new data frame

xyz$z <- predict(m_step, newdata=xyz, type='response')

summary(xyz)## bio11 bio10 z

## Min. :-14.448 Min. : 7.446 Min. :0.0000000

## 1st Qu.: -8.352 1st Qu.:13.643 1st Qu.:0.0009263

## Median : -2.003 Median :20.099 Median :0.0342420

## Mean : -2.003 Mean :20.099 Mean :0.2331589

## 3rd Qu.: 4.347 3rd Qu.:26.555 3rd Qu.:0.4140808

## Max. : 10.442 Max. :32.752 Max. :0.9832289# As result, we have a 3D data structure and want to visualise this.

# Here, I first set a color palette

library(RColorBrewer)

cls <- colorRampPalette(rev(brewer.pal(11, 'RdYlBu')))(100)

# Finally, we plot the response surface using the wireframe function from the lattice package

library(lattice)

wireframe(z ~ bio11 + bio10, data = xyz, zlab = list("Occurrence prob.", rot=90),

drape = TRUE, col.regions = cls, scales = list(arrows = FALSE), zlim = c(0, 1))

# We can also rotate the axes to better see the surface

wireframe(z ~ bio11 + bio10, data = xyz, zlab = list("Occurrence prob.", rot=90),

drape = TRUE, col.regions = cls, scales = list(arrows = FALSE), zlim = c(0, 1),

screen=list(z = -120, x = -70, y = 3))

This looks very nice. However, if the model gets more complicated and contains more variables than just two, how can we then visualise it?

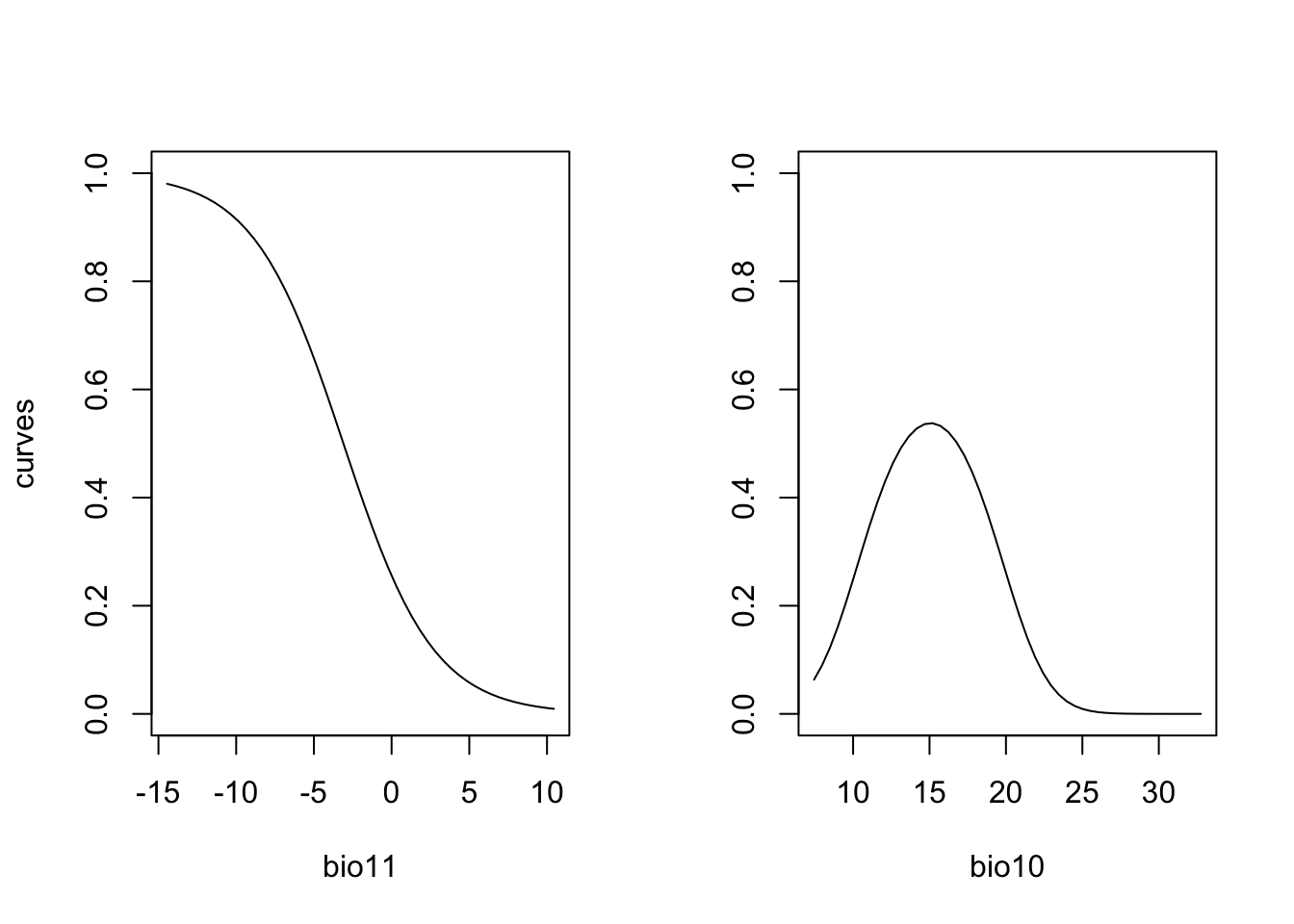

One way is to cut the response surface into slices. Most often, we

simply plot the response along one environmental gradient while keeping

all other gradients constant at their mean. We call this

partial response plots. For simplicity, we can

use the function partial_response() from the

mecofun package for plotting.

library(mecofun)

# Names of our variables:

my_preds <- c('bio11', 'bio10')

# We want two panels next to each other:

par(mfrow=c(1,2))

# Plot the partial responses

partial_response(m_step, predictors = lynx_thinned[,my_preds])

When you compare the response surface and the partial response curves, you see that the latter only give us an incomplete picture of what is going on as they are only “slicing” the response surface in the middle.

Exercise:

Fit another GLM with two different (weakly correlated) predictor

variables and call this object m2. Plot the response

surface, and the partial response curves.

- How do you interpret the fitted relationship?

2.2 Assessing SDM performance

In the previous session, we already learned about the measure “explained deviance” that tells us something about the goodness-of-fit, meaning how well the model fits the data.

expl_deviance(obs = lynx_thinned$occ,

pred = m_step$fitted)## [1] 0.4235096Here, our two-predictor model explains roughly 40% of the variance in the data. However, often we are not only interested in how well our model fits the data but how robust the model is against changes in the input data and, thus, how robust predictions to other places and times might be. This assessment of model performance is often referred to as validation or evaluation. Evaluating the model on the calibration or training data is often referred to as internal validation (“resubstitution”) (Araujo et al. 2005). This generally gives a too optimistic picture of model performance. It is better to evaluate the model using data that have not been used for model fitting. One way is to split the data and only train the model on some proportion of the data and validate it on the hold-out data. Potential procedures are repeated split-samples (e.g. splitting into 70% training and 30% test data and repeat several times) and k-fold cross-validation (e.g. 5-fold or 10-fold), where the data are split into k portions, the kth portion is held out for validation and the procedure is repeated k times. If these folds are stratified in geographic or environmental space, we talk of spatial block cross-validation and environmental block cross-validation (Roberts et al. 2017).

Here, we will use the function crossvalSDM() from the

mecofun package to split our data into 5 folds,

re-calibrate the model using only 4/5 of the original data and predict

the model to the hold-out 1/5 of the data.

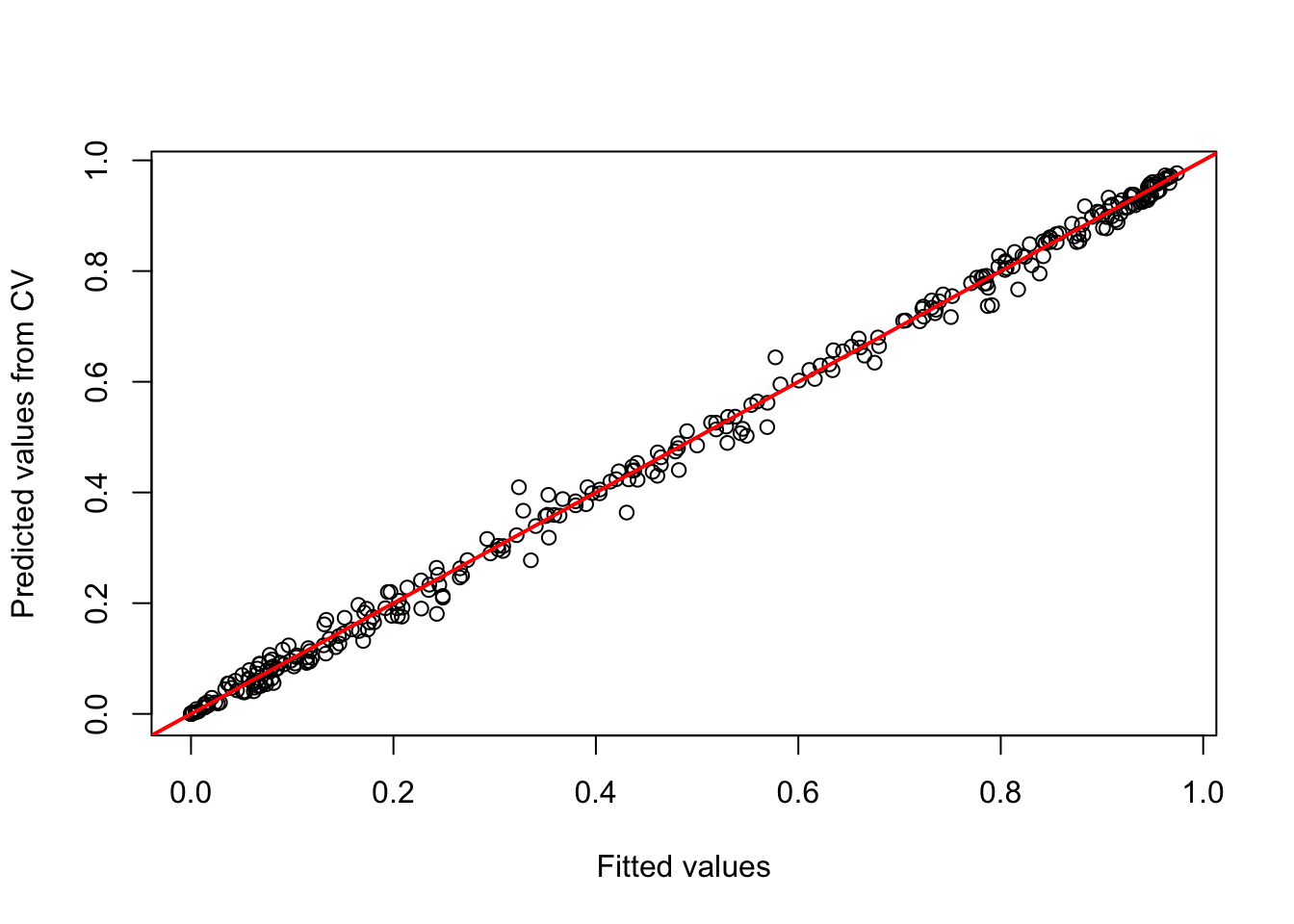

preds_cv <- crossvalSDM(m_step, traindat = lynx_thinned, colname_species = 'occ', colname_pred = my_preds)We receive a numeric vector of cross-validated prediction. Out of curiosity, let us compare the fitted values on the training data and the predictions on the cross-validation data. You should see that the predictions are similar (this is good, otherwise our model would be very sensitive to changes in input data) but not identical - thus, we have basically added some noise.

plot(m_step$fitted.values, preds_cv, xlab='Fitted values', ylab='Predicted values from CV')

abline(0,1,col='red',lwd=2)

We will use these cross-validated predictions to assess model performance.

2.2.1 Threshold-dependent performance measures

Now, we want to know how well our model predicts the observations.

Different measures are available for quantifying this. A lot of these

measures are threshold-dependent. You have probably realised that our

model predicts a continuous response, the probability of occurrence,

while our observations are binary. Many performance measures rely on

comparisons like “How many presence observations does the model

correctly predict as presence”. In order to answer this we first need to

convert the continuous probabilities into binary predictions. Different

thresholds are introduced in Liu et al.

(2005). Most of these are implemented in the

PresenceAbsence package in the

optimal.thresholds function.

library(PresenceAbsence)

# We first prepare our data:

# Prepare cross-validated predictions:

thresh_dat <- data.frame(

ID = seq_len(nrow(lynx_thinned)),

obs = lynx_thinned$occ,

pred = preds_cv)

# Then, we find the optimal thresholds:

(thresh_cv <- PresenceAbsence::optimal.thresholds(DATA= thresh_dat))## Method pred

## 1 Default 0.5000000

## 2 Sens=Spec 0.4600000

## 3 MaxSens+Spec 0.4300000

## 4 MaxKappa 0.4300000

## 5 MaxPCC 0.4300000

## 6 PredPrev=Obs 0.4700000

## 7 ObsPrev 0.4489164

## 8 MeanProb 0.4507287

## 9 MinROCdist 0.4300000

## 10 ReqSens 0.4600000

## 11 ReqSpec 0.4300000

## 12 Cost 0.4300000We can compare observed vs. predicted presences and absences based on

these tresholds. For this, we take our predictions from the

cross-validation. The comparison is easiest illustrated in a confusion

matrix, for example using the function cmx in the

PresenceAbsence package.

Have a look at Liu et al. (2005) to see which thresholds they recommend. Here, we will use the threshold that maximises the sum of sensitivity and specificity (the third row in the thresholds data frame):

(cmx_maxSSS <- PresenceAbsence::cmx(DATA= thresh_dat, threshold=thresh_cv[3,2]))## observed

## predicted 1 0

## 1 133 26

## 0 12 152From such a confusion matrix, we can calculate different evaluation

criteria. For example,

- the proportion of correctly classified test observations

pcc

- the proportion of correctly classified presences, also called

sensitivity or true positive rate

- the proportion of correctly classified absences, also called

specificity or true negative rate

# Proportion of correctly classified observations

PresenceAbsence::pcc(cmx_maxSSS, st.dev=F)## [1] 0.8823529# Sensitivity = true positive rate

PresenceAbsence::sensitivity(cmx_maxSSS, st.dev=F)## [1] 0.9172414# Specificity = true negative rate

PresenceAbsence::specificity(cmx_maxSSS, st.dev=F)## [1] 0.8539326Other measures are Kappa and TSS (the true skill statistic). Allouche, Tsoar, and Kadmon (2006) explain how to calculate these.

# Kappa

PresenceAbsence::Kappa(cmx_maxSSS, st.dev=F)## [1] 0.7643332# True skill statistic

TSS(cmx_maxSSS) ## [1] 0.771174According to Araujo et al. (2005), Kappa>0.4 indicate good predictions. For TSS, we often assume TSS>0.5 to indicate good predictions.

2.2.2 Threshold-independent performance measures

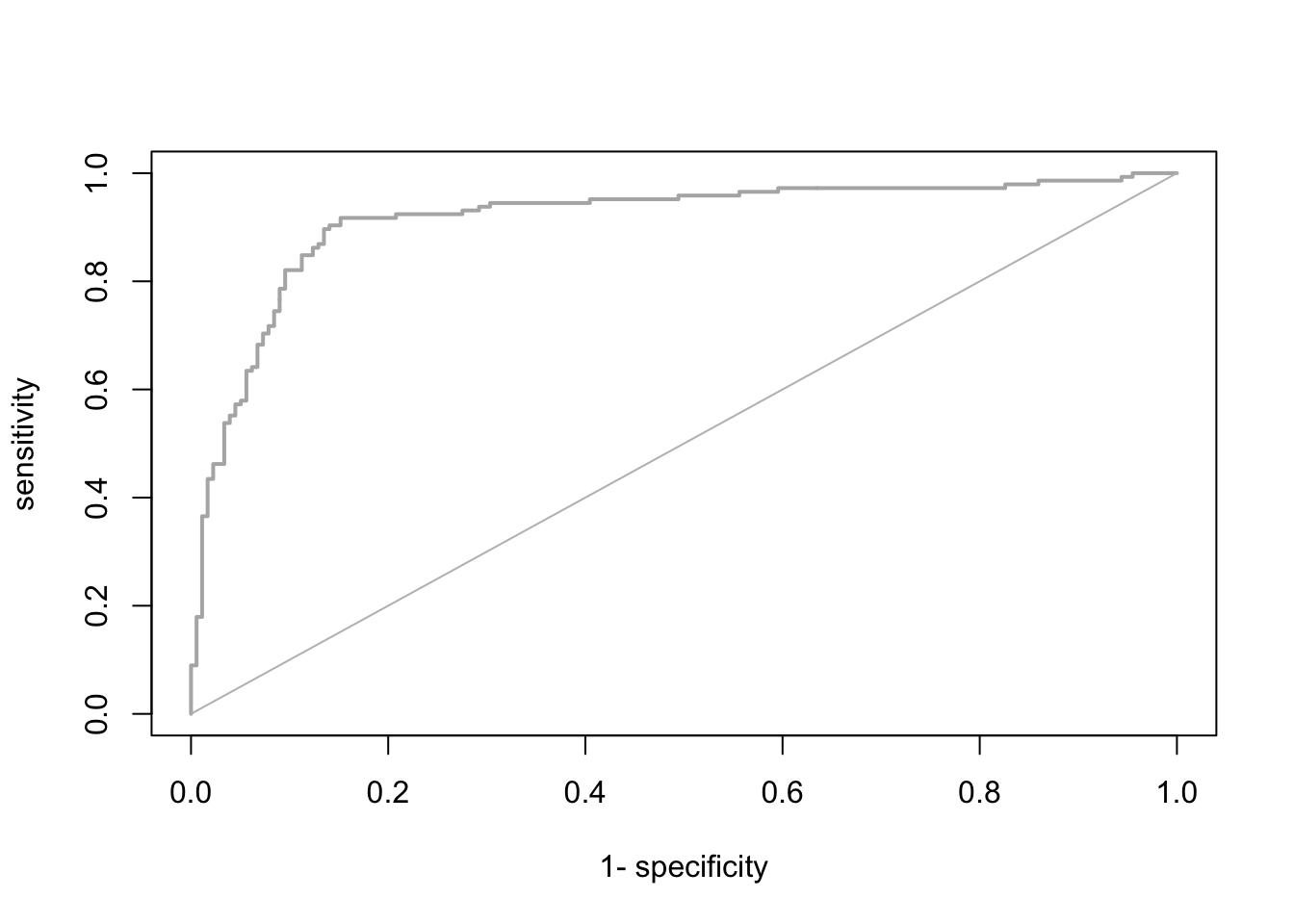

The most common evaluation statistic that avoids thresholding the data is AUC - the area under the receiver-operating characteristic (ROC) curve. ROC curves are generated by calculating sensitivity (true positive rate) and specificity (true negative rate) for many thresholds along the entire range of predicted probabilities. Then, (1-specificity) is plotted on the x-axis against sensitivity on the y axis. The area under this curve is called the AUC. The further the generated curve deviates from the 1:1 line towards the upper-left corner, the better the model predicts presence/absence of a species. If we would take a random presence and a random absence from our observations and make predictions, than AUC can be interpeted as the chance of assigning a higher predicted occurrence probability to the presence compared to the absence point. Typically, we regard AUC>0.7 as indicating fair predictions (Araujo et al. 2005).

library(AUC)

# Let's have a look a the ROC curve:

roc_cv <- roc(preds_cv, as.factor(lynx_thinned$occ))

plot(roc_cv, col = "grey70", lwd = 2)

# Compute the AUC:

AUC::auc(roc_cv)## [1] 0.9140643It seems our model is performing pretty well on hold-out data. We can thus attempt making predictions in space and time.

Please be aware that many packages contain functions for evaluating

SDMs. As always you have to find your own way. Here, I provide merely

examples. To ease further performance assessments during this course,

the mecofun package contains a function

evalSDM() that computes the here-mentioned performance

measures. By default, this function uses the MaxSens+Spec

threshold.

evalSDM(lynx_thinned$occ, preds_cv)## AUC TSS Kappa Sens Spec PCC D2 thresh

## 1 0.9140643 0.771174 0.7643332 0.9172414 0.8539326 0.8823529 0.3980163 0.43Exercise:

Use the m2 model from the previous exercise and assess

the TSS, sensitivity, specificity, and AUC.

- Which model (

m1orm2) has a higher predictive performance?

3 Spatio-temporal predictions

We have already learned how to make predictions using the function

predict() and also using the argument newdata.

All we need for transferring our model to other places and times are the

respective environmental variables.

3.1 Prepare the environmental layers

We have already learned how to download climate data from WorldClim using the

geodata package.

Download the current climate layers:

library(geodata)

# rough European extent

extent_eur <- c(-15,45,35,72)

# Please note that you have to set download=T if you haven't downloaded the data before:

bio_curr <- geodata::worldclim_global(var = 'bio', res = 10, download = F, path = 'data')

# Crop data to European extent

bio_curr <- terra::crop(bio_curr, extent_eur)

# Aggregate to 1° resolution

bio_curr <- terra::aggregate(bio_curr, 6)

# Change names such that they match the names in our lynx data set

names(bio_curr) <- c(paste0('bio0',1:9), paste0('bio',10:19))Download the future climate layers. More information on the model abbreviations and the available SSPs can be found here: https://www.worldclim.org/data/cmip6/cmip6_clim10m.html.

# Please note that you have to set download=T if you haven't downloaded the data before:

bio_fut <- geodata::cmip6_world(model='ACCESS-ESM1-5', ssp='245', time='2041-2060', var='bioc', download=F, res=10, path='data')

# Crop data to European extent

bio_fut <- terra::crop(bio_fut, extent_eur)

# Aggregate to 1° resolution

bio_fut <- terra::aggregate(bio_fut, 6)3.2 Make predictions to the environmental layers

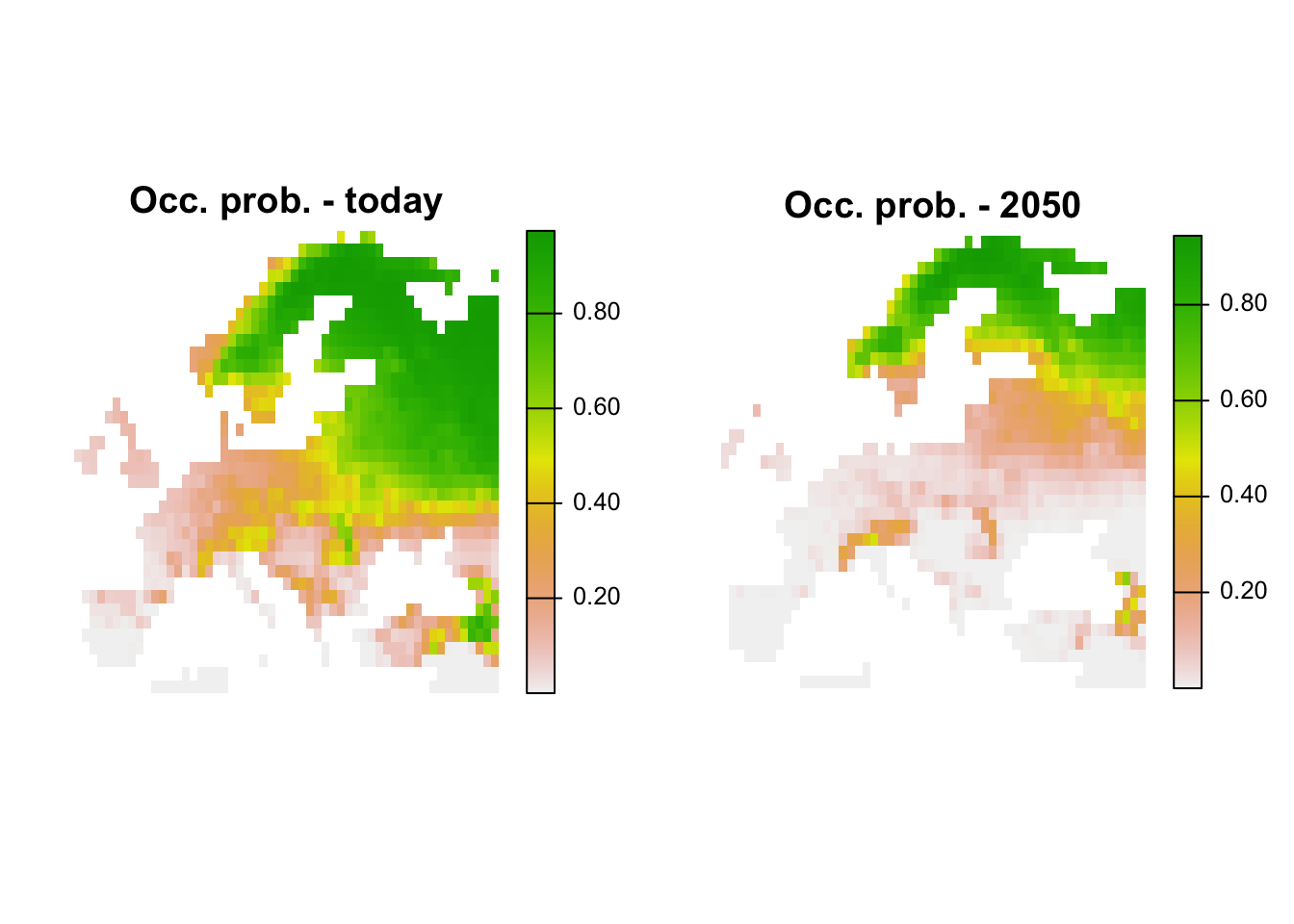

It is now straight forward to make continuous predictions to the current and the future climate:

# Prepare data frames

bio_curr_df <- data.frame(crds(bio_curr),as.points(bio_curr))

bio_fut_df <- data.frame(crds(bio_fut),as.points(bio_fut))

# Make continuous predictions:

bio_curr_df$pred_glm <- predict(m_step, newdata= bio_curr_df, type="response")

bio_fut_df$pred_glm <- predict(m_step, newdata= bio_fut_df, type="response")Of course, we can also plot the predictions:

par(mfrow=c(1,2))

# Make raster of predictions to current environment:

r_pred_curr <- terra::rast(bio_curr_df[,c('x','y','pred_glm')], type='xyz', crs=crs(bio_curr))

plot(r_pred_curr, axes=F, main='Occ. prob. - today')

# Make raster stack of predictions to future environment:

r_pred_fut <- terra::rast(bio_fut_df[,c('x','y','pred_glm')], type='xyz', crs=crs(bio_curr))

plot(r_pred_fut, axes=F, main='Occ. prob. - 2050')

We see that lynx is predicted to decline under the chosen climate scenario.

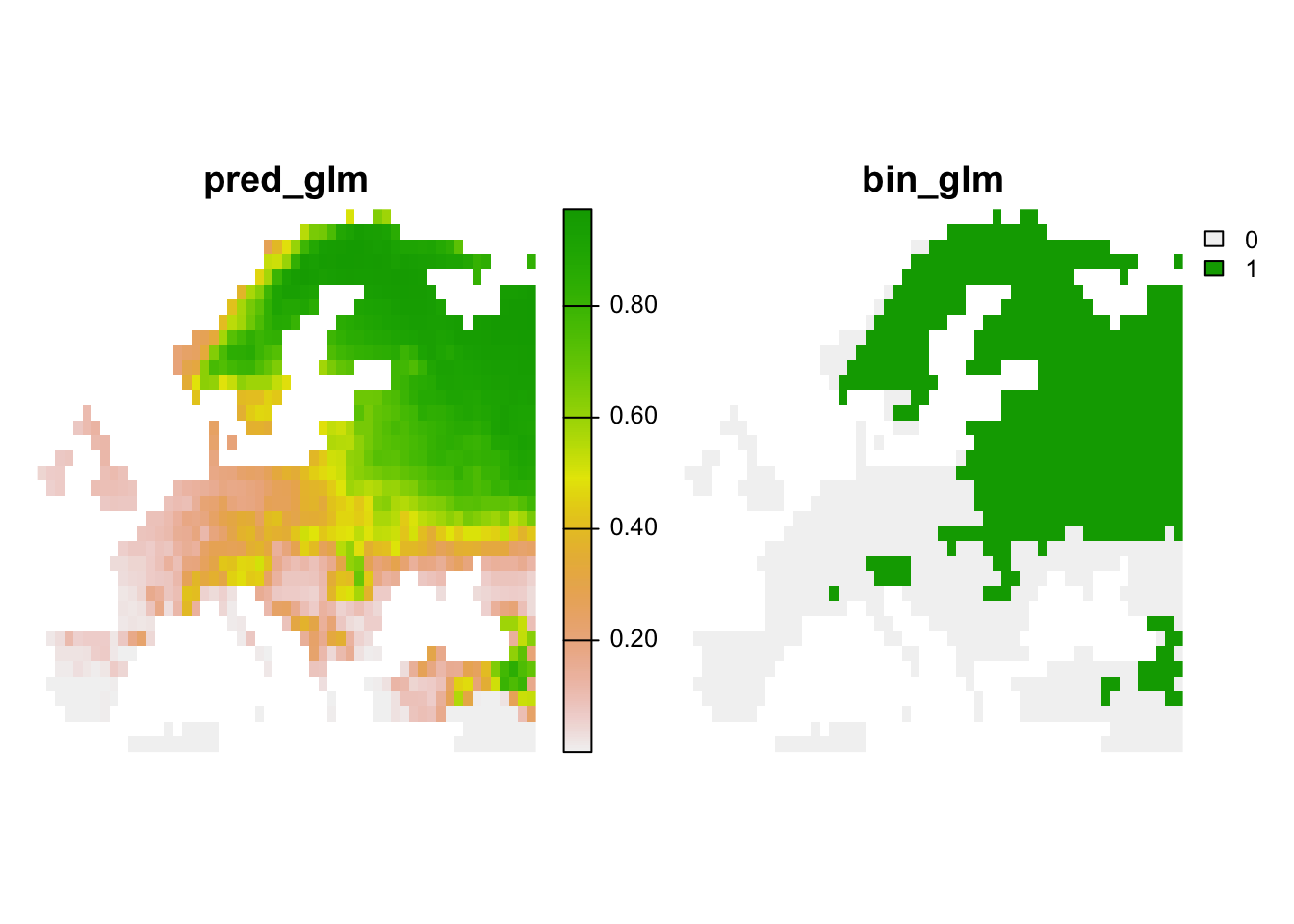

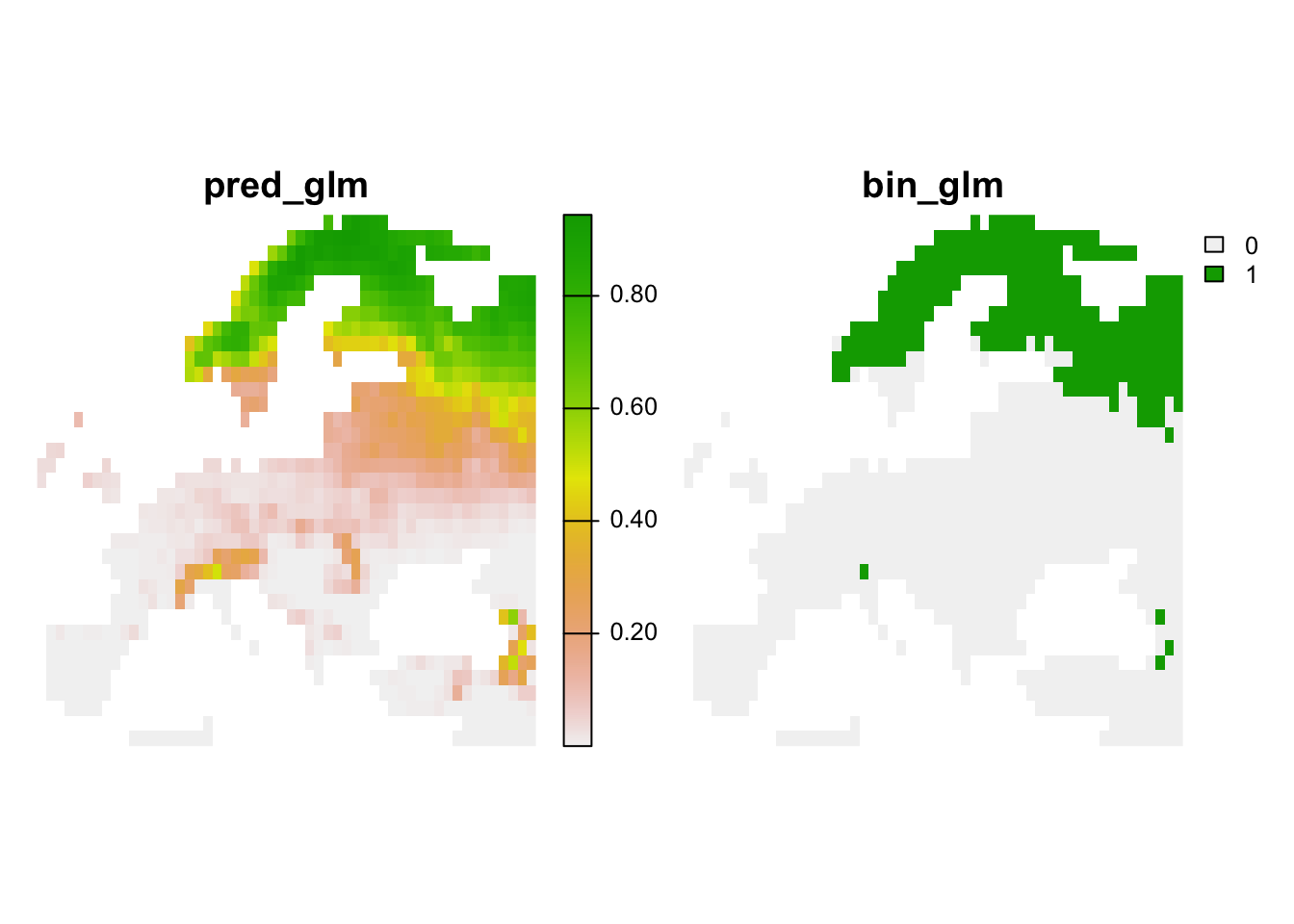

Lastly, we can also translate the continuous predictions into binary predictions and plot the resulting maps.

# Make binary predictions:

bio_curr_df$bin_glm <- ifelse(bio_curr_df$pred_glm >= thresh_cv[3,2], 1, 0)

bio_fut_df$bin_glm <- ifelse(bio_fut_df$pred_glm >= thresh_cv[3,2], 1, 0)

# Make raster stack of predictions to current environment:

r_pred_curr <- terra::rast(bio_curr_df[,c('x','y','pred_glm','bin_glm')], type='xyz', crs=crs(bio_curr))

plot(r_pred_curr, axes=F)

# Make raster stack of predictions to future environment:

r_pred_fut <- terra::rast(bio_fut_df[,c('x','y','pred_glm','bin_glm')], type='xyz', crs=crs(bio_curr))

plot(r_pred_fut, axes=F)

4 Homework prep

For the homework, you will need several objects that you should not forget to save.

# Write terra objects to file:

terra::writeRaster(bio_curr, 'data/bio_curr.tif')

terra::writeRaster(bio_fut, 'data/bio_fut.tif')

# Save other (non-terra) objects from the workspace:

save(m_step, my_preds, preds_cv, file='data/5_SDM_eval.RData')As homework, solve all the exercises in the blue boxes.

Exercise:

In the previous exercise, you estimated another (full) model with different (or more) predictors. Use this model now and make predictions to current and future climates.

- Which model predicts a larger area under current climate?

- Which model predicts a larger difference between current and future climate?